Lÿnx Backlink Verification Utility

Lÿnх: The Ultimate Backlink Verification Utility for Web Developers

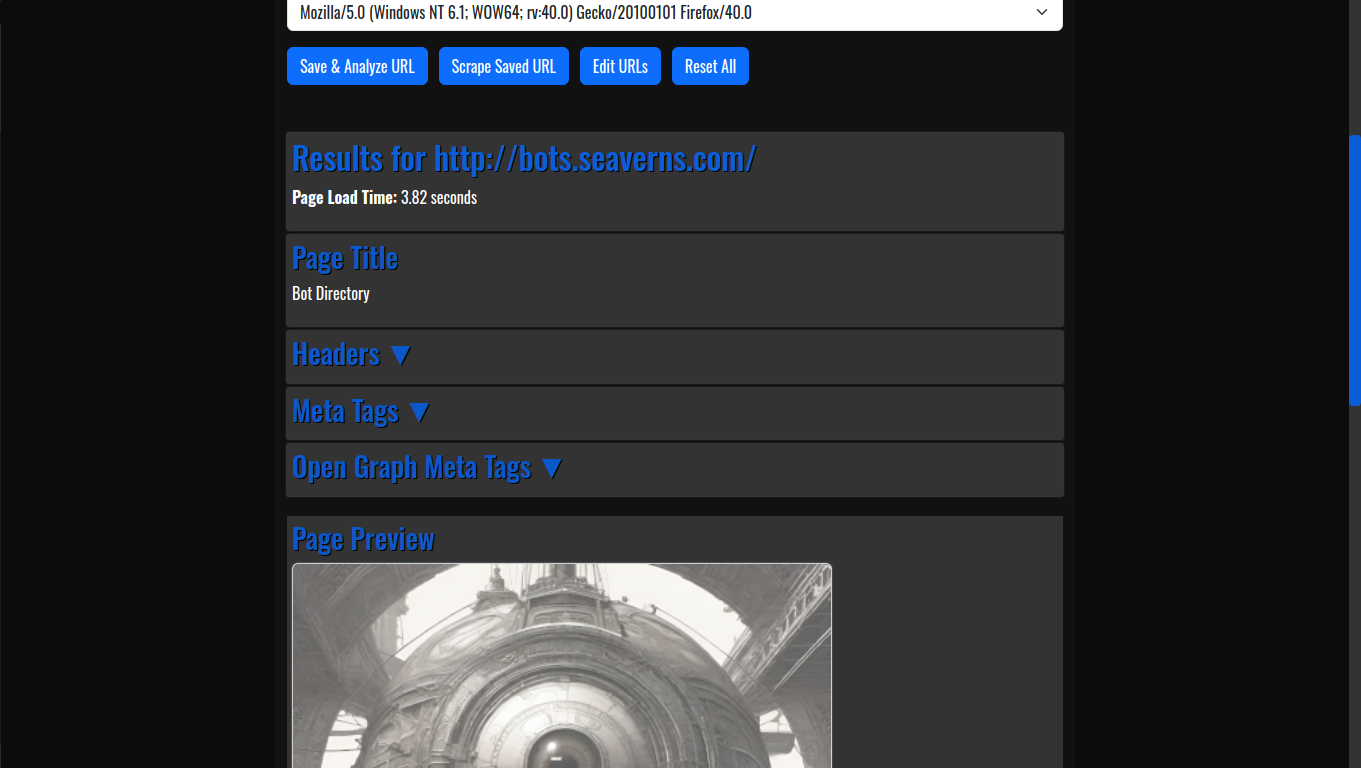

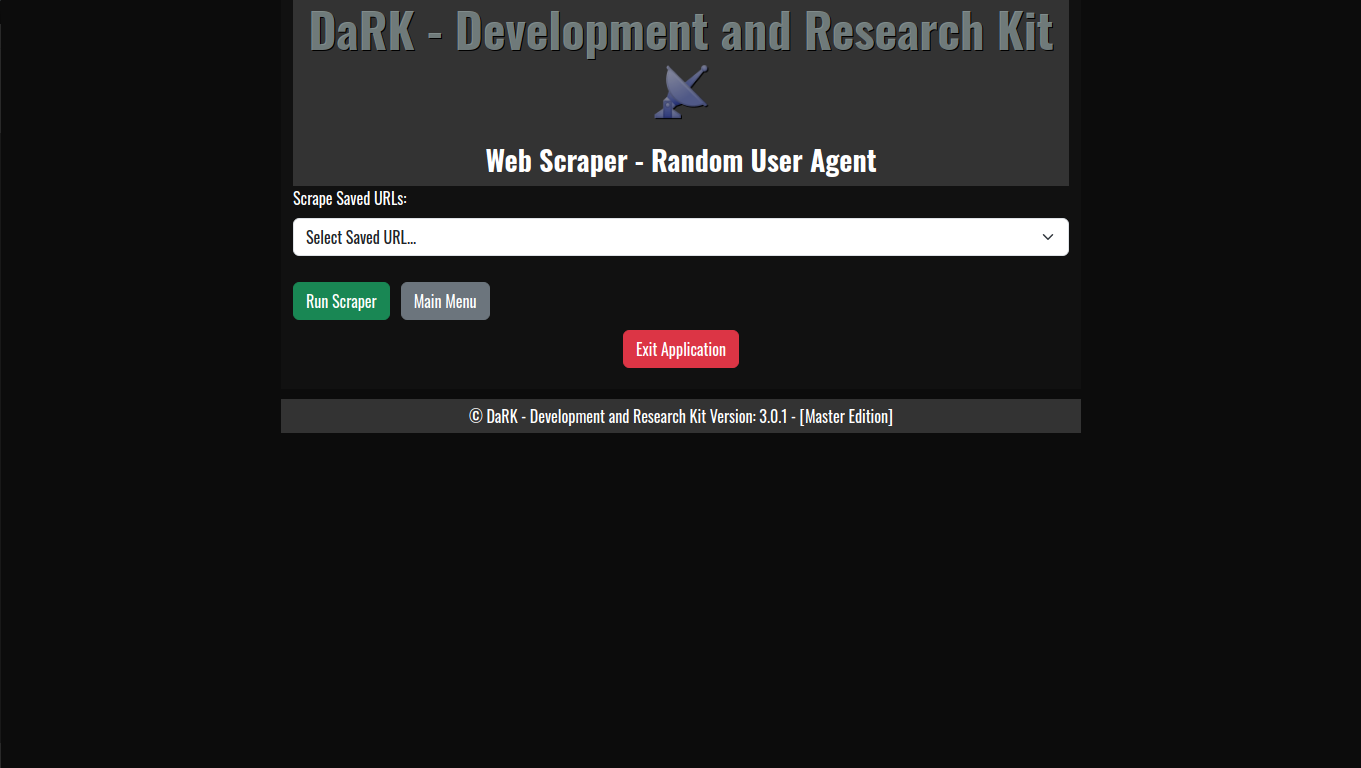

In today’s digital landscape, web development and search engine optimization (SEO) are inseparable. A major part of SEO is verifying backlinks, since working, correctly targeted links support your site’s credibility and search engine ranking. Enter Lÿnх, a backlink verification tool designed to streamline this process. Developed by K0NxT3D, a leader and pioneer in web technologies, Lÿnх comes in two versions for different use cases: a CLI (command-line interface) version and a Web UI version.

What Does Lÿnх Do?

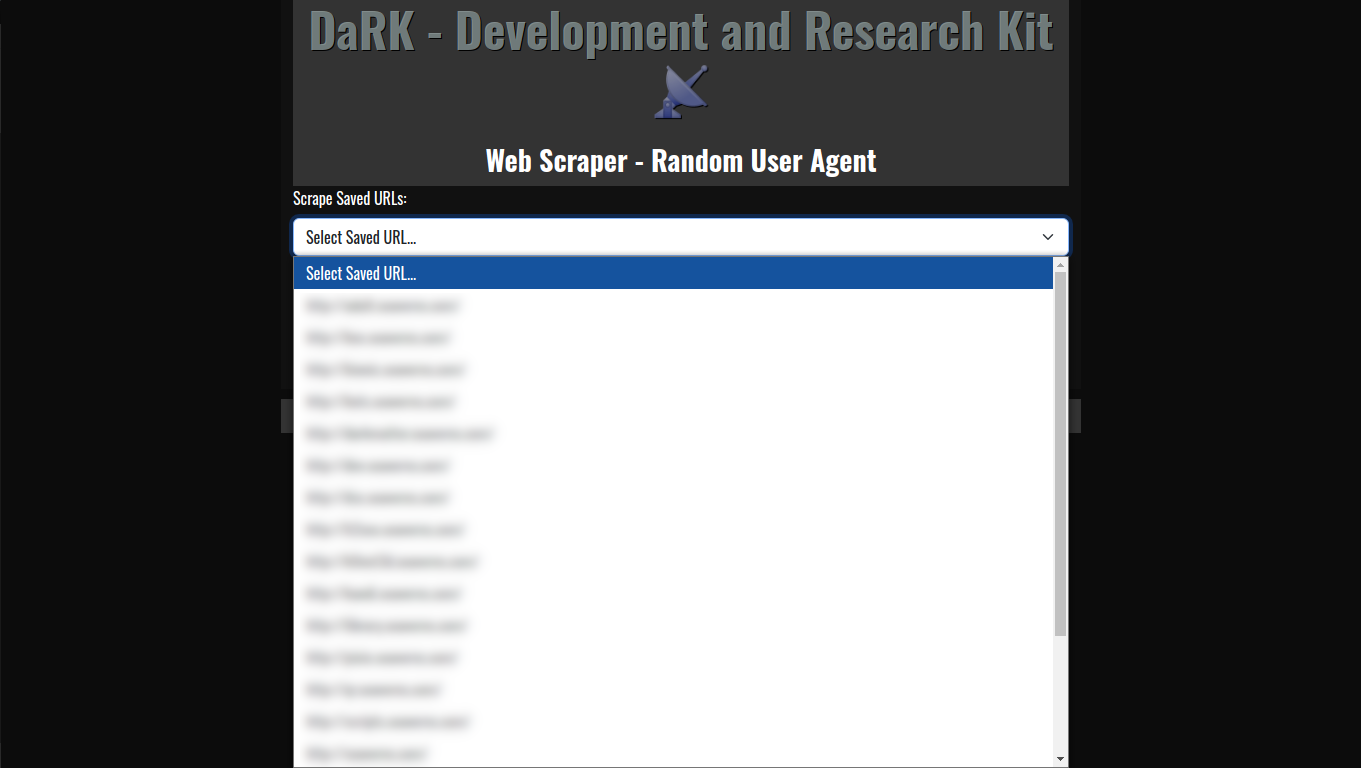

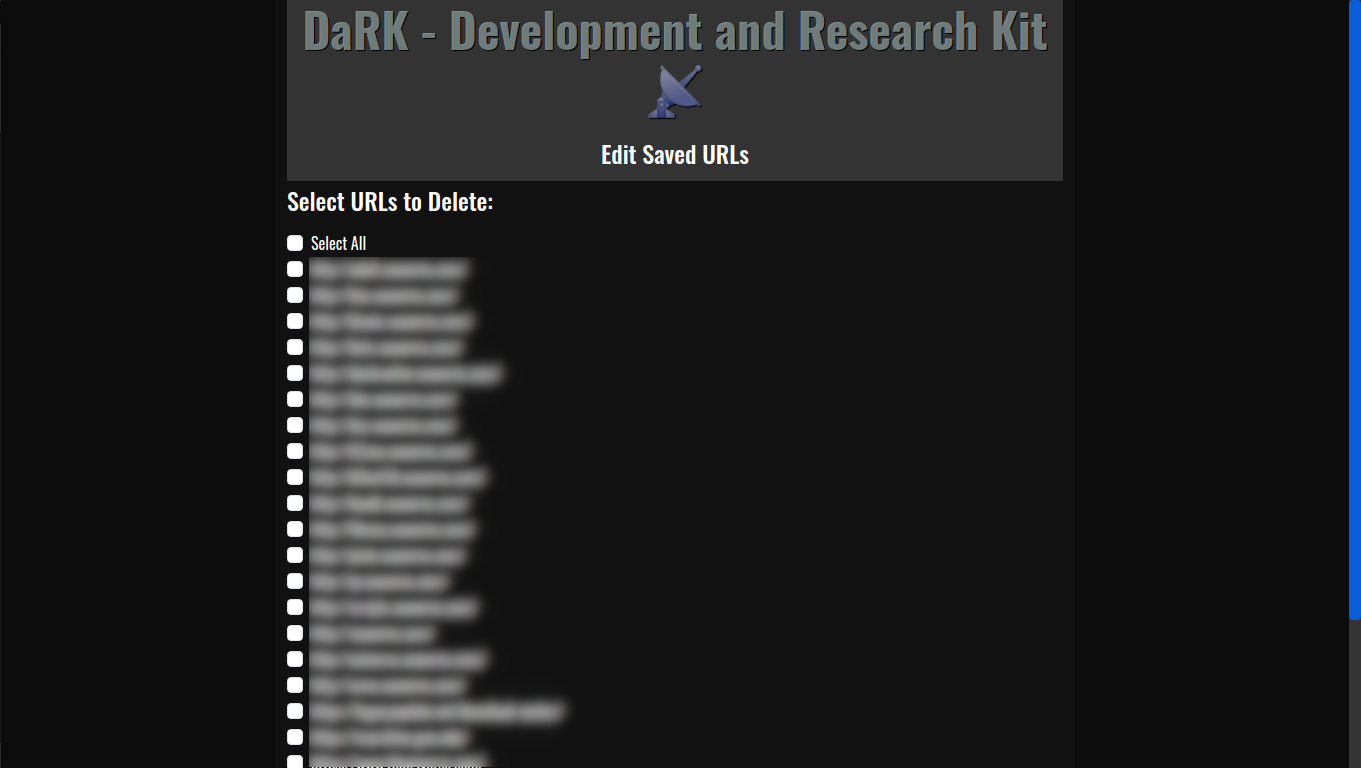

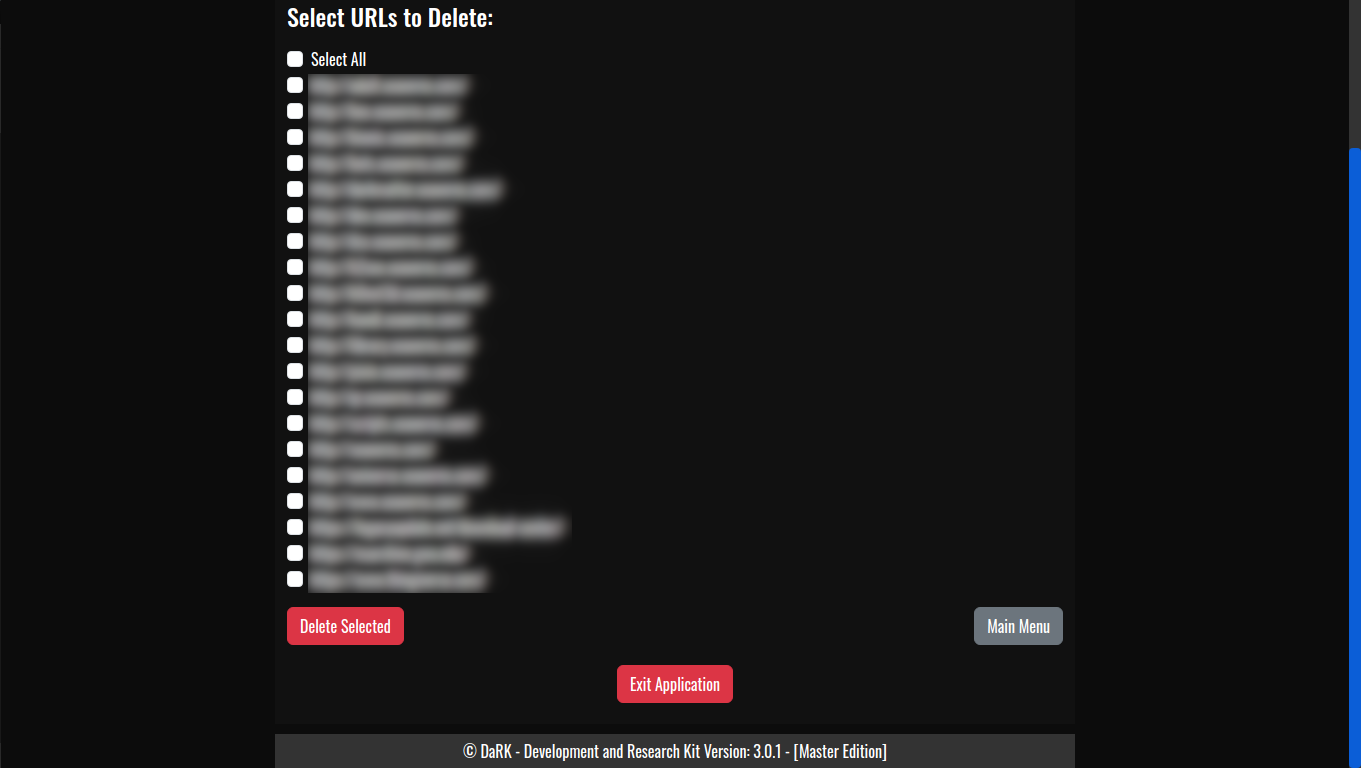

Lÿnх is a versatile tool aimed at web developers, SEOs, and site administrators who need to verify backlinks. A backlink is a hyperlink on one website that points to a page on another. Verifying a backlink means confirming that the link is still live and still points to the intended destination. Lÿnх’s core function is to scan (or “scrape”) the pages where a website’s backlinks should appear and validate that each link exists and is correct, so that links that are broken or that point to the wrong page can be caught.
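To make “valid, live, and properly pointing to the intended destination” concrete, here is a minimal Python sketch of how a single backlink check could be classified. It is not Lÿnх’s actual code: the function names (`normalize`, `classify_backlink`) and the result labels are assumptions made for illustration, and the HTTP status and the list of links found on the referring page are passed in rather than fetched.

```python
from urllib.parse import urlsplit


def normalize(url):
    """Lower-case the scheme and host; drop the fragment and any trailing slash."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/")
    query = "?" + parts.query if parts.query else ""
    return f"{parts.scheme.lower()}://{parts.netloc.lower()}{path}{query}"


def classify_backlink(status_code, found_links, target_url):
    """Classify one backlink check (hypothetical labels, not Lynx output).

    status_code -- HTTP status of the referring page, or None if unreachable
    found_links -- absolute URLs of the links found on the referring page
    target_url  -- the page the backlink is supposed to point to
    """
    # The referring page itself could not be loaded.
    if status_code is None or status_code >= 400:
        return "broken"

    target = normalize(target_url)
    target_host = urlsplit(target).netloc
    normalized = [normalize(link) for link in found_links]

    # The page links to exactly the intended destination.
    if target in normalized:
        return "ok"
    # The page links to the right site, but to a different page.
    if any(urlsplit(link).netloc == target_host for link in normalized):
        return "wrong_page"
    # The page loads but contains no link to the target site.
    return "missing"
```

Keeping the classification separate from the network request means the same logic can be reused from a command-line script or a web interface, and tested without any live sites.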

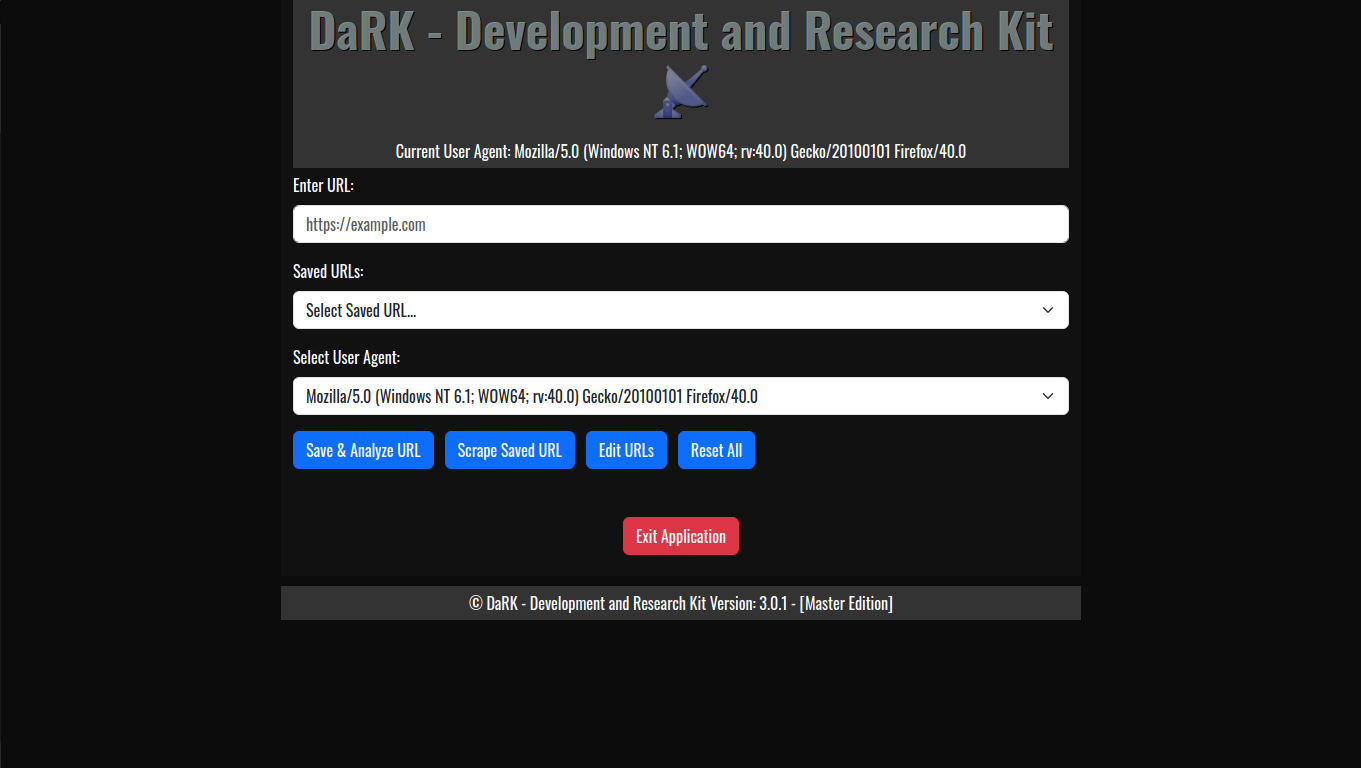

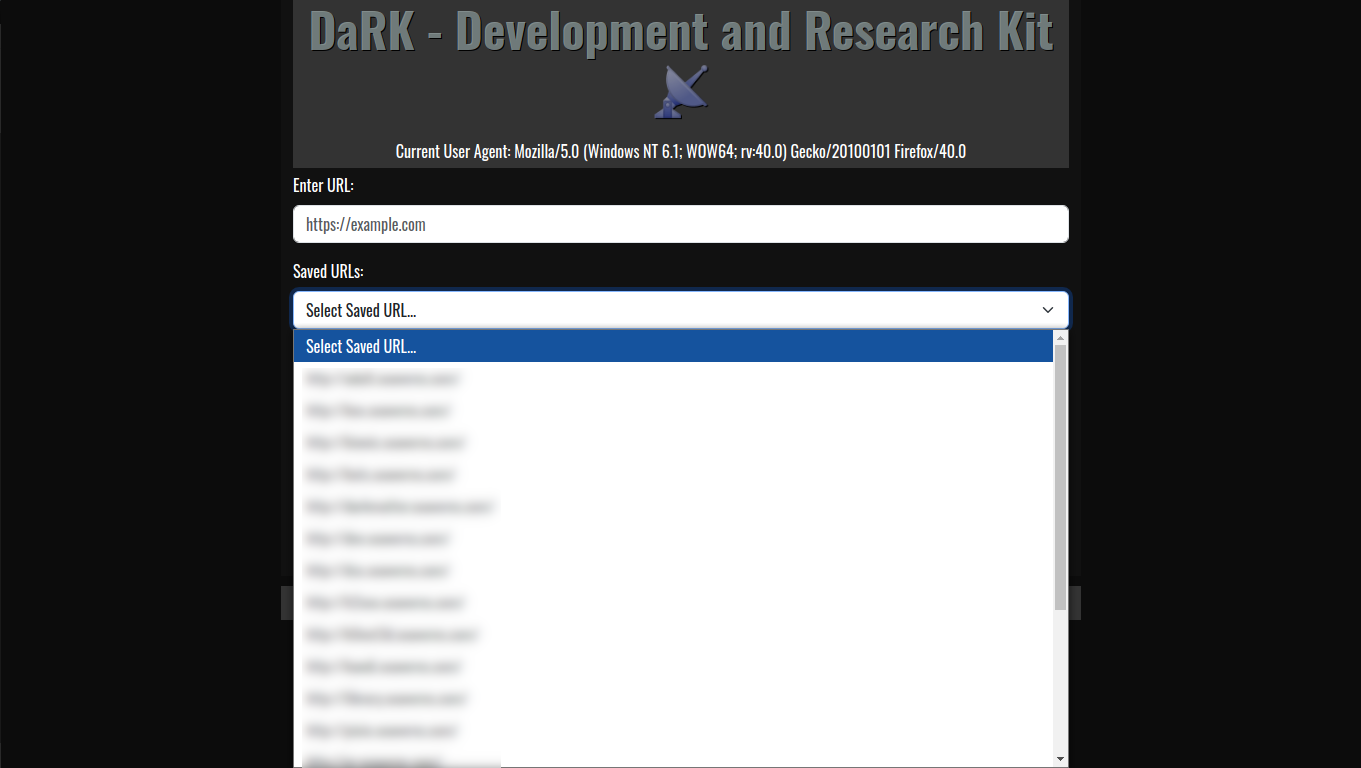

Lÿnх Backlink Verification Utility

Why Should You Use Lÿnх?

For any website owner or developer, managing backlinks is crucial for maintaining strong SEO. Broken links can damage a website’s credibility, affect search engine rankings, and worsen user experience. Lÿnх addresses these concerns by providing a fast and effective solution for backlink verification. Whether you’re optimizing an existing site or conducting routine checks, Lÿnх helps you keep your backlinks in good shape.

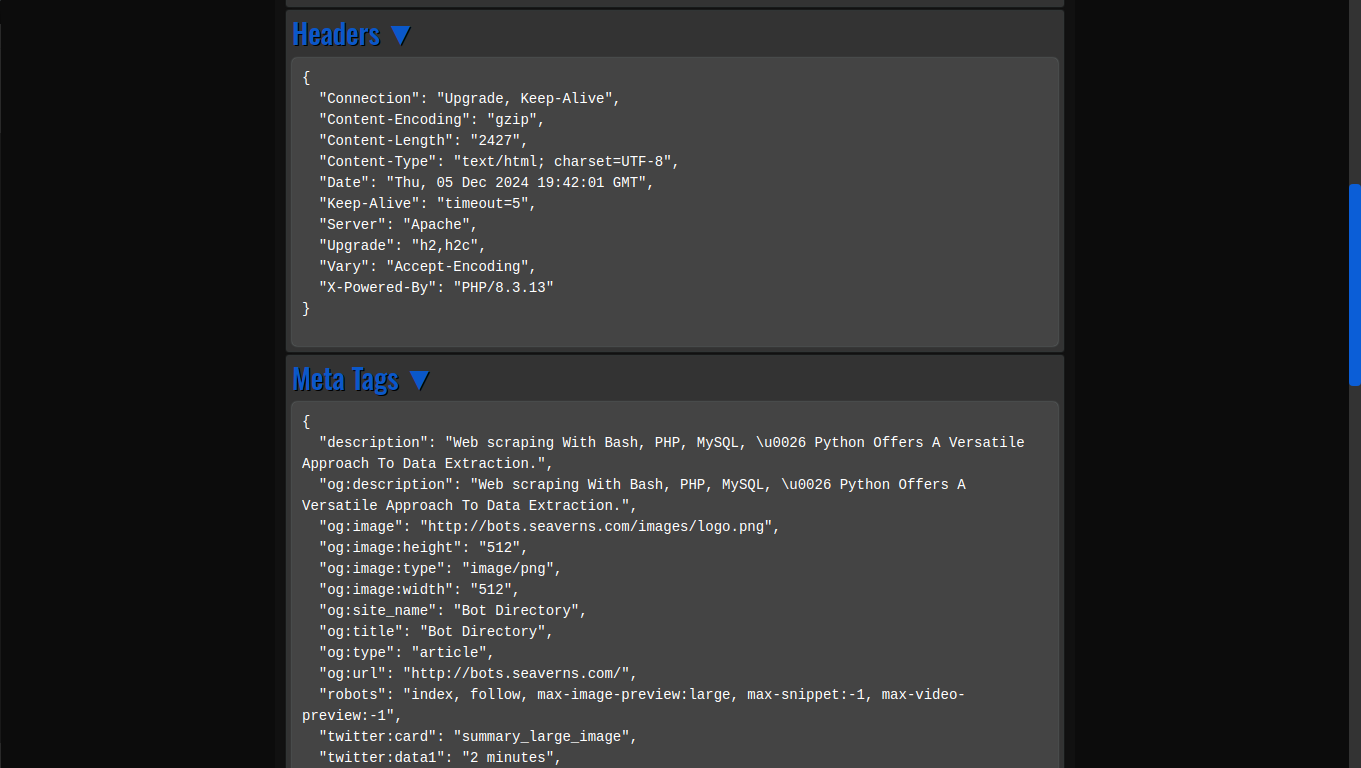

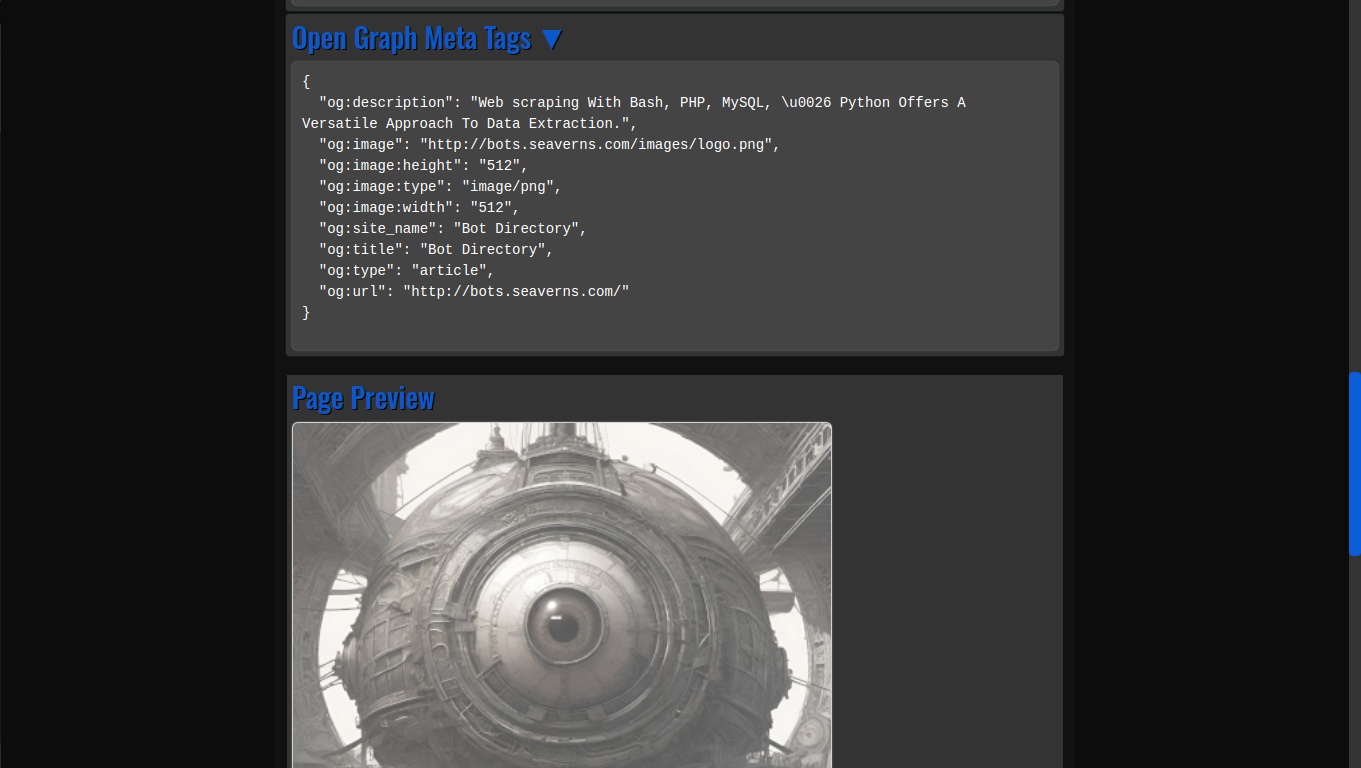

The Technology Behind Lÿnх

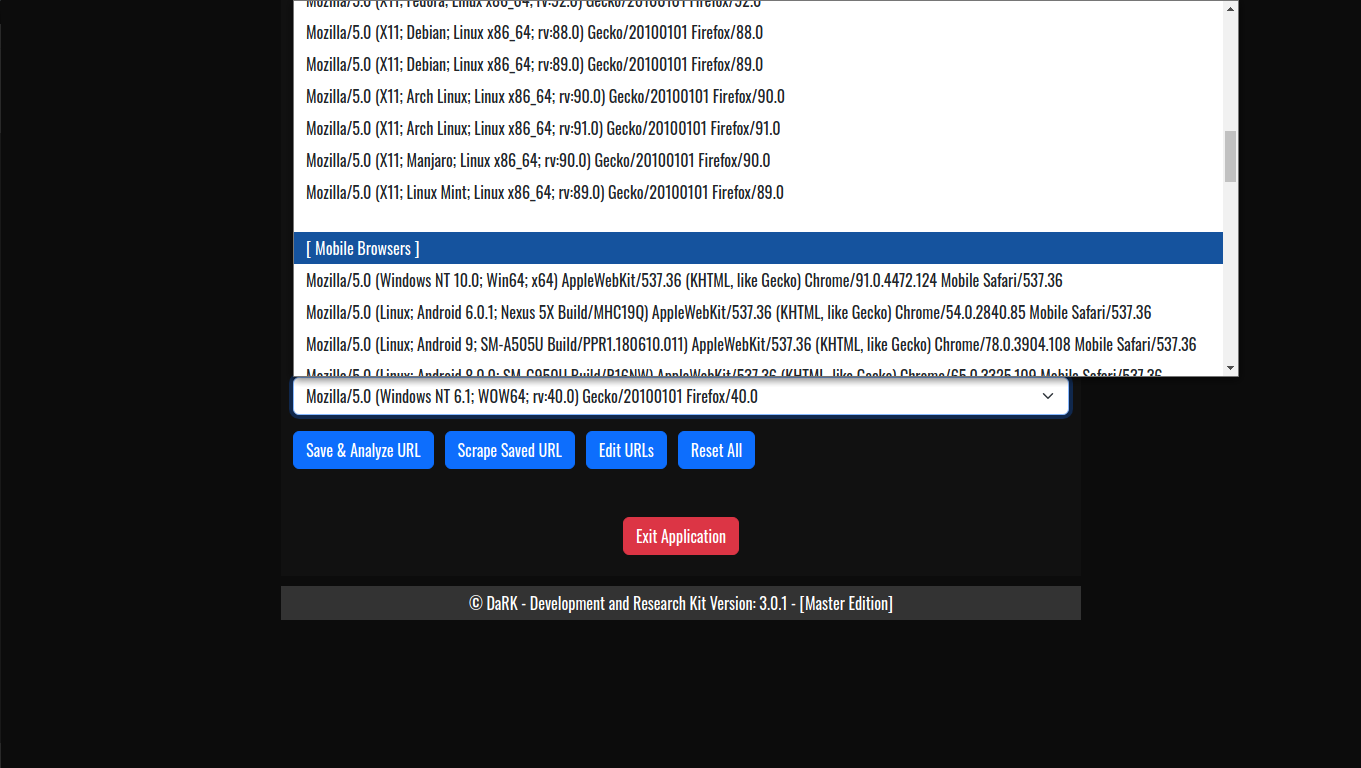

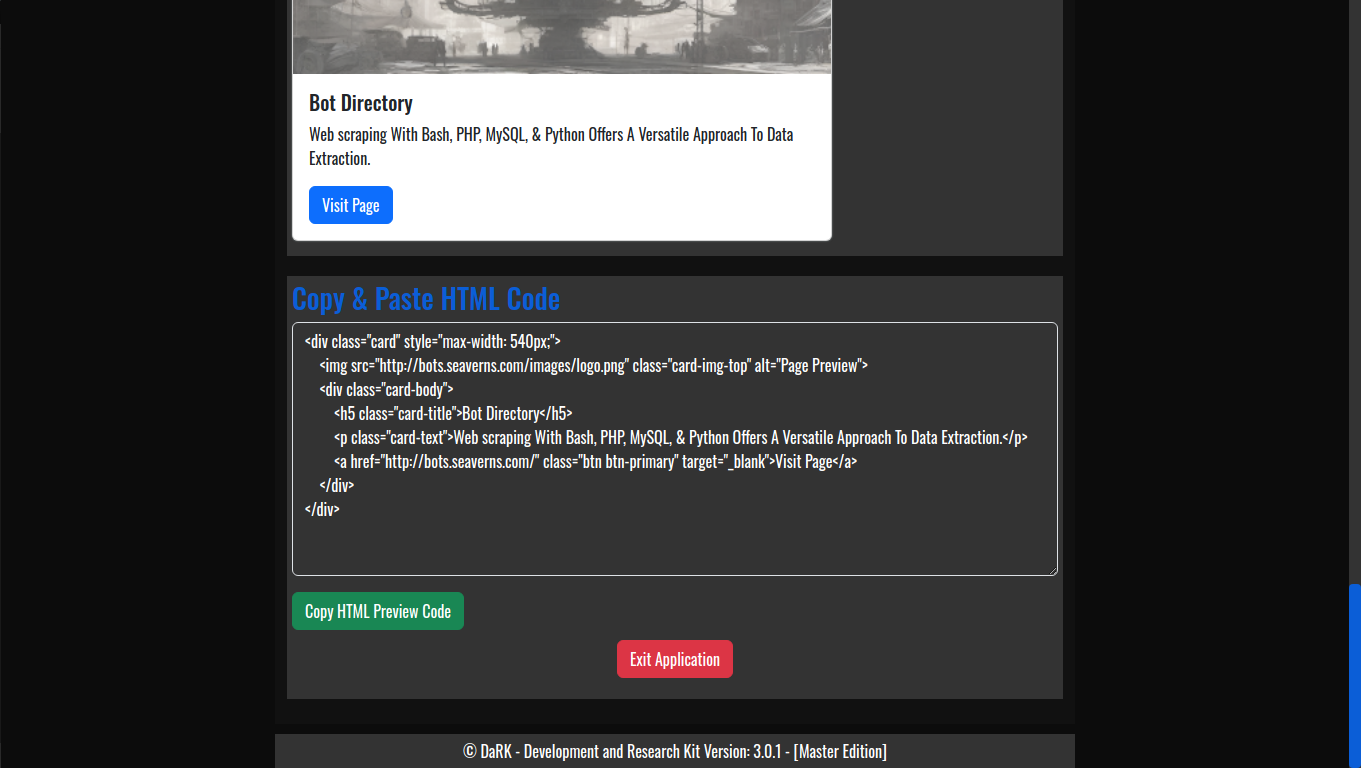

Lÿnх is built on an efficient parsing engine that processes large amounts of link data quickly, scanning each link to confirm it is valid. The CLI version (Lÿnх 1.0) operates through straightforward commands, which makes it well suited to automation in server-side environments, while the Web UI version (Lÿnх 1.2) offers a clean, user-friendly interface for more interactive and accessible verification.

The tool integrates seamlessly into your web development workflow, parsing HTML documents, extracting backlinks, and checking their status. Its low resource usage and high processing speed make it ideal for both small websites and large-scale applications with numerous backlinks to verify.
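The parse-and-extract step described above can be sketched with nothing but Python’s standard library. This is an illustrative example, not Lÿnх’s implementation: the class and function names (`LinkExtractor`, `extract_links`, `links_to_domain`) and the sample URLs are assumptions.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the absolute URL of every <a href="..."> in a document."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative hrefs against the URL of the page itself.
                self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    """Return all anchor targets in `html` as absolute URLs, in document order."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


def links_to_domain(links, domain):
    """Keep only links whose host is `domain` or one of its subdomains."""
    matches = []
    for link in links:
        host = (urlparse(link).hostname or "").lower()
        if host == domain or host.endswith("." + domain):
            matches.append(link)
    return matches
```

For example, `links_to_domain(extract_links(page_html, page_url), "example.com")` would list every link on a referring page that points at `example.com`; an empty result suggests the backlink is no longer there.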

Lÿnх Backlink Verification Utility – Efficiency and Speed

Lÿnх is designed with performance in mind. Its lightweight architecture allows it to quickly scan even the most extensive lists of backlinks without overloading servers or consuming unnecessary resources. The CLI version is especially fast, offering a no-nonsense approach to backlink verification that can run on virtually any server or local machine. Meanwhile, the Web UI version maintains speed without compromising on ease of use.

Why Lÿnх is Essential for Web Development

In the competitive world of web development and SEO, ensuring the integrity of backlinks is crucial for success. Lÿnх provides a reliable, high-speed solution that not only verifies links but helps you maintain a clean and efficient website. Whether you’re a freelance developer, part of an agency, or managing your own site, Lÿnх’s intuitive tools offer unmatched utility. With K0NxT3D’s expertise behind it, Lÿnх is the trusted choice for anyone serious about web development and SEO.

Lÿnх Backlink Verification Utility

Lÿnх is more than just a backlink verification tool; it’s an essential component for anyone looking to maintain a high-performing website. With its high efficiency, speed, and powerful functionality, Lÿnх continues to lead the way in backlink management, backed by the expertise of K0NxT3D.