Web Scraping Basics

Web Scraping Basics: Understanding the World of Scrapers

Web scraping is the use of automated techniques and tools to extract data from websites. It lets users gather large amounts of data from the internet without manual copying, transforming unstructured web content into structured formats for analysis, research, or use in other applications.

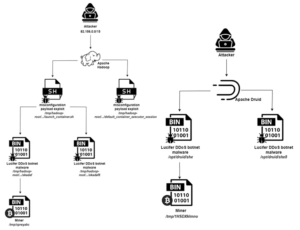

At its core, web scraping involves sending an HTTP request to a website, receiving the page’s HTML in the response, and then parsing that HTML to extract useful information. The extracted data can range from text and images to links and tables. Python is a popular language for building scrapers, with tools such as BeautifulSoup (HTML parsing), Scrapy (a crawling framework), and Selenium (browser automation) commonly used to automate the process.
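The request-download-parse cycle described above can be sketched with only Python’s standard library (`urllib.request` for fetching, `html.parser` for parsing); BeautifulSoup offers a friendlier API for the parsing step. This is a minimal sketch, not a production scraper: the helper names `fetch`, `LinkExtractor`, and `extract_links`, the placeholder User-Agent string, and the choice to extract links are all illustrative assumptions.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch(url: str, timeout: float = 10.0) -> str:
    """Hypothetical helper: send the HTTP request and return the page as text."""
    # Placeholder User-Agent; a real scraper should identify itself honestly.
    req = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        # Use the charset the server declares, falling back to UTF-8.
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


class LinkExtractor(HTMLParser):
    """Hypothetical parser that collects (href, link text) pairs from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href: str | None = None  # href of the <a> we are inside, if any
        self._text: list[str] = []     # text chunks seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside a link with an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html: str) -> list[tuple[str, str]]:
    """Parse an HTML string and return its links in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links
```

For example, `extract_links(fetch("https://example.com"))` would return the links on that page. Relative hrefs such as `/docs` come back unchanged, so you would typically pass them through `urllib.parse.urljoin` to get absolute URLs.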

Web scraping matters because it collects data from many sources efficiently. Businesses, data scientists, marketers, and researchers use it to gather competitive intelligence, track market trends, collect product details, and monitor changes across websites.

However, web scraping has its challenges. Websites often deploy anti-scraping measures such as CAPTCHAs, rate limiting, and IP blocking to prevent unauthorized scraping. To get past these hurdles, scrapers employ techniques like rotating IP addresses, routing traffic through proxies, and simulating human-like browsing behavior to avoid detection.
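Of the measures above, rate limiting is the one a scraper can most simply accommodate rather than fight: spacing requests out on the client side keeps traffic under a site’s limits. Below is a minimal fixed-interval throttle, offered as a sketch under stated assumptions: the `Throttle` class, its `min_interval` parameter, and the injectable `clock`/`sleep` hooks (there so it can be tested without real waiting) are hypothetical, and the right interval for any given site is a judgment call.

```python
import time
from typing import Callable, Optional


class Throttle:
    """Hypothetical helper: enforce a minimum delay between consecutive requests."""

    def __init__(
        self,
        min_interval: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_interval = min_interval  # seconds between requests
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None  # time of the previous request

    def wait(self) -> float:
        """Block until min_interval has passed since the last call; return seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self._last + self.min_interval - now)
            if delay > 0:
                self._sleep(delay)
        # Record when this request is allowed to go out.
        self._last = now + delay
        return delay
```

In a scraping loop you would create one `Throttle` per site and call `wait()` immediately before each request.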

Understanding the ethical and legal implications of web scraping is equally important. Many websites have terms of service that prohibit scraping, and violating these terms can lead to legal consequences. It’s crucial to always respect website policies and use scraping responsibly.
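One concrete way to respect a site’s stated policies is to check its robots.txt file before fetching pages. The sketch below uses Python’s standard `urllib.robotparser` module; the helper name `allowed_by_robots` and the sample rules are illustrative assumptions. Keep in mind that robots.txt is a crawling convention, not a substitute for reading a site’s terms of service.

```python
from urllib.robotparser import RobotFileParser


def allowed_by_robots(robots_txt: str, user_agent: str, url: str) -> bool:
    """Hypothetical helper: return True if robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # Ensure the parser treats the rules as loaded; can_fetch() refuses
    # everything while no load time is recorded.
    parser.modified()
    return parser.can_fetch(user_agent, url)
```

Against a live site you would instead call `set_url("https://example.com/robots.txt")` followed by `read()` on a `RobotFileParser`, then use `can_fetch()` the same way.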

In conclusion, these basics provide the foundation for automated data extraction. By mastering the techniques and tools involved, you can unlock valuable insights from vast amounts of online data while navigating the technical challenges and ethical considerations that come with scraping.

Web Scraping Basics: Best Resources for Learning Web Scraping

Web scraping is a popular topic with many excellent learning resources. Here are some of the best places to find comprehensive, high-quality material on web scraping:

1. Online Courses

- Udemy:

- “Web Scraping with Python” by Andrei Neagoie: Covers Python libraries like BeautifulSoup, Selenium, and Requests.

- “Python Web Scraping” by Jose Portilla: A complete beginner’s guide to web scraping.

- Coursera:

- “Data Science and Python for Web Scraping”: This course provides a great mix of Python and web scraping with practical applications.

- edX:

- Many universities, like Harvard and MIT, offer courses, especially in data science, that include web scraping topics.

2. Books

- “Web Scraping with Python” by Ryan Mitchell: This is one of the best books for beginners and intermediates, providing in-depth tutorials using popular libraries like BeautifulSoup, Scrapy, and Selenium.

- “Python for Data Analysis” by Wes McKinney: Although it’s primarily about data analysis, it includes sections on web scraping using Python.

- “Automate the Boring Stuff with Python” by Al Sweigart: A beginner-friendly book that includes a great section on web scraping.

3. Websites & Tutorials

- Real Python:

- Offers high-quality tutorials on web scraping with Python, including articles on using BeautifulSoup, Scrapy, and Selenium.

- Scrapy Documentation: Scrapy is one of the most powerful frameworks for web scraping, and its documentation provides a step-by-step guide to getting started.

- BeautifulSoup Documentation: BeautifulSoup is one of the most widely used libraries, and its documentation has plenty of examples to follow.

- Python Requests Library: Requests is a widely used library for making HTTP requests in Python, and its documentation has clear, concise examples.

4. YouTube Channels

- Tech with Tim: Offers great beginner tutorials on Python and web scraping.

- Code Bullet: Focuses on programming projects, including some that involve web scraping.

- Sentdex: Offers a web scraping series that covers tools like BeautifulSoup and Selenium.

5. Community Forums

- Stack Overflow: A large community of web scraping practitioners answers questions here, and you can find solutions to most common scraping problems.

- Reddit – r/webscraping: A community dedicated to web scraping with discussions, tips, and resources.

- GitHub: There are many open-source web scraping projects on GitHub that you can explore for reference or use.

6. Tools and Libraries

- BeautifulSoup (Python): One of the most popular libraries for HTML parsing. It’s easy to use and great for beginners.

- Scrapy (Python): A more advanced framework for large-scale crawling and scraping, well suited to complex scraping tasks.

- Selenium (Python, Java, JavaScript, and other languages): Primarily a browser automation tool, which makes it well suited to scraping dynamic websites that rely heavily on JavaScript.

- Puppeteer (JavaScript): If you’re working in JavaScript, Puppeteer is a great choice for scraping dynamic content.
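To make the BeautifulSoup entry concrete, here is a minimal sketch that turns an HTML table into a list of dictionaries, one of the unstructured-to-structured conversions described earlier. It assumes the `beautifulsoup4` package is installed and that the first table row holds the column headers; `table_to_dicts` is a hypothetical helper name, not part of BeautifulSoup.

```python
from __future__ import annotations

from bs4 import BeautifulSoup


def table_to_dicts(html: str) -> list[dict[str, str]]:
    """Hypothetical helper: turn the first <table> into a list of {header: cell} dicts."""
    soup = BeautifulSoup(html, "html.parser")  # built-in parser, no extra install
    table = soup.find("table")
    if table is None:
        return []
    rows = table.find_all("tr")
    if not rows:
        return []
    # Assumption: the first row contains the column headers (th or td cells).
    headers = [cell.get_text(strip=True) for cell in rows[0].find_all(["th", "td"])]
    records = []
    for row in rows[1:]:
        cells = [cell.get_text(strip=True) for cell in row.find_all(["th", "td"])]
        # zip() stops at the shorter list, so ragged rows don't raise.
        records.append(dict(zip(headers, cells)))
    return records
```

If the `lxml` package is installed, passing `"lxml"` instead of `"html.parser"` is a common way to speed up parsing on large pages.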

7. Web Scraping Blogs

- Zyte Blog (formerly the Scrapinghub Blog): Articles on best practices, tutorials, and new scraping techniques using Scrapy and other tools.

- Dataquest Blog: Offers tutorials and guides that include web scraping for data science projects.

- Towards Data Science: This Medium publication regularly features web scraping tutorials with Python and other languages.

8. Legal and Ethical Considerations

- It’s important to understand the ethical and legal aspects of web scraping. Resources on this topic include:

- Scrapinghub (now Zyte) Legal Section: Includes guides and articles on the legal implications of web scraping.

- Legal Risks of Web Scraping (Blog): Discusses the dos and don’ts of web scraping to ensure you are compliant with laws like GDPR and site-specific Terms of Service.

9. Practice Sites

- Web Scraper (webscraper.io): A web scraping tool that also offers tutorials and practice sites.

- BeautifulSoup Practice: Hands-on exercises specifically for web scraping.

- ScrapingBee: Provides an API for scraping websites and a blog with tutorials.

With these resources, you should be able to build a solid foundation in web scraping and advance to more complex tasks as you become more experienced.