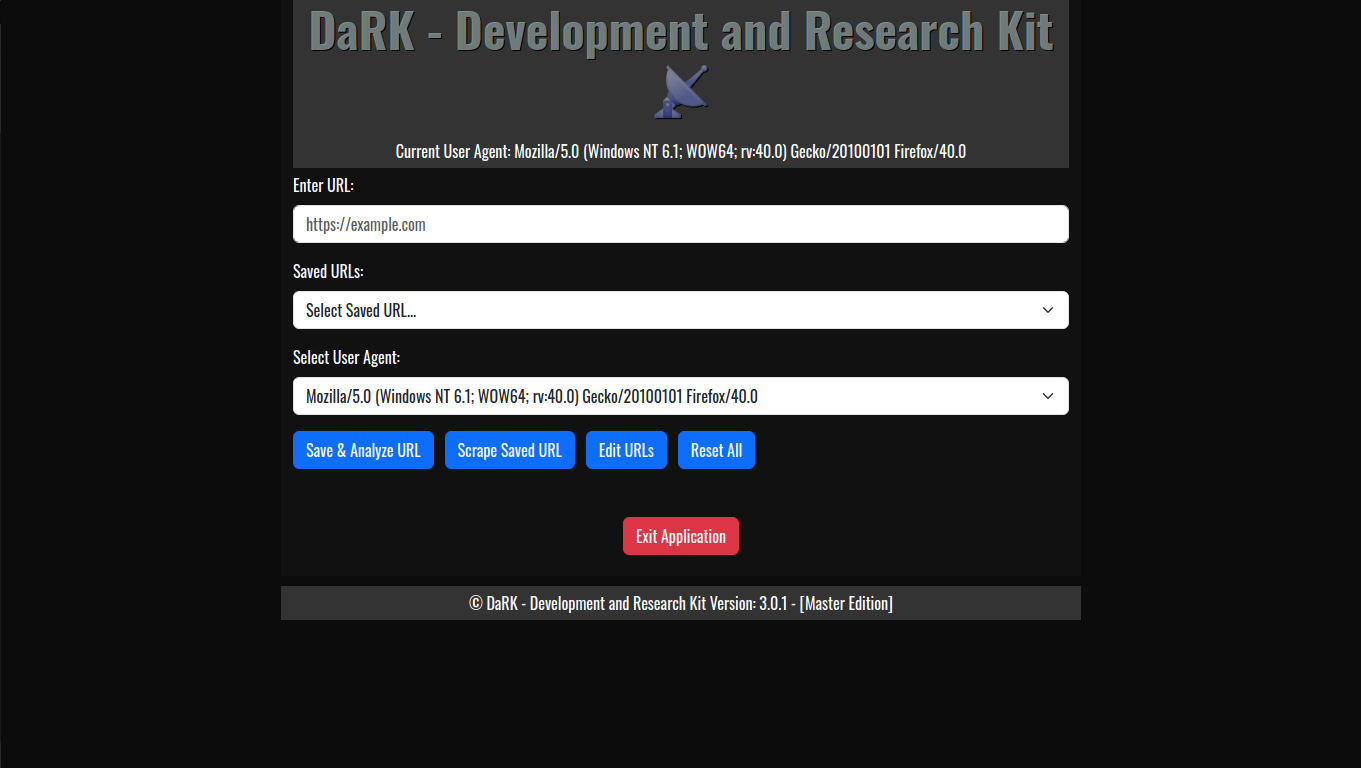

FreeDDNS – A Dynamic DNS Solution for Everyone

Dynamic DNS (DDNS) is a service that automatically updates the IP address associated with a domain name when the IP address changes. This is particularly useful for devices with dynamic IP addresses, such as home routers or servers, where the IP address is not static and can change frequently. Without DDNS, accessing these devices remotely would require manually updating the IP address each time it changes, which is impractical.

What is FreeDDNS?

FreeDDNS is a cost-effective, self-hosted Dynamic DNS solution designed to provide users with a reliable way to map a domain name to a dynamic IP address without relying on third-party services. Unlike traditional DDNS services that often come with subscription fees or limitations, FreeDDNS empowers users to create their own DDNS system using simple PHP scripts and a web server.

How FreeDDNS Works

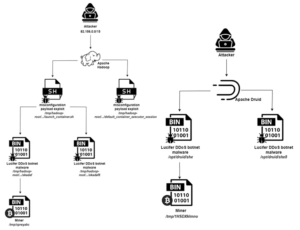

The FreeDDNS project consists of three core scripts:

- fddns.php: This script runs on the local machine and sends periodic requests to a remote server. It includes the local machine’s hostname in the request, allowing the remote server to identify and log the client’s IP address.

- access.php: This script runs on the remote server and logs the client’s IP address and hostname. It ensures that the latest IP address is always recorded in a log file (fddns.log).

- index.php: This script fetches the logged IP address and hostname from fddns.log and uses them to retrieve and display web content from the client’s machine.
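
To make the logging step concrete, here is a minimal sketch of the idea behind access.php and the read side of index.php. It is written in Python purely for illustration and is not the project's PHP code. The single-record JSON layout, the field names, and the helper names `record_client` and `latest_client` are all assumptions; the actual format of fddns.log is not described here.

```python
import json
import os
import tempfile
from datetime import datetime, timezone
from typing import Optional


def record_client(log_path: str, hostname: str, ip: str) -> None:
    """Replace the log contents so it holds only the latest hostname/IP pair.

    Assumption: one JSON object per log file. The real fddns.log may differ.
    """
    entry = {
        "hostname": hostname,
        "ip": ip,
        "seen_at": datetime.now(timezone.utc).isoformat(),
    }
    # Write to a temporary file in the same directory, then rename it over
    # the log, so a concurrent reader never sees a half-written file.
    directory = os.path.dirname(os.path.abspath(log_path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w", encoding="utf-8") as handle:
        json.dump(entry, handle)
    os.replace(tmp_path, log_path)


def latest_client(log_path: str) -> Optional[dict]:
    """Return the most recently logged entry, or None if nothing usable exists."""
    try:
        with open(log_path, encoding="utf-8") as handle:
            return json.load(handle)
    except (FileNotFoundError, json.JSONDecodeError):
        return None
```

Overwriting the file rather than appending to it keeps the lookup trivial: whatever is in the log is the current address. An append-only log would preserve history but would require reading the last line instead.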

The process is simple:

- The local machine sends a request containing its hostname to the remote server, which sees the public IP address the request came from.

- The remote server logs this information.

- When accessed, the remote server uses the logged IP address to fetch content from the local machine, effectively creating a dynamic link between the domain name and the changing IP address.
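
The three steps above can be sketched as three small functions. This is a Python illustration under stated assumptions, not the project's PHP code: the query parameter name `hostname`, the example server URL, and the function names are hypothetical, and the sketch assumes the local machine serves plain HTTP on the default port and is reachable from the remote server.

```python
import ipaddress
from urllib.parse import parse_qs, urlencode, urlsplit


def build_report_url(server_url: str, hostname: str) -> str:
    """Step 1 (client): the URL the local machine requests periodically."""
    return f"{server_url}?{urlencode({'hostname': hostname})}"


def handle_report(request_url: str, remote_addr: str) -> dict:
    """Step 2 (server): pair the reported hostname with the caller's address.

    remote_addr is the address the web server saw the request come from;
    the client does not need to know its own public IP.
    """
    query = parse_qs(urlsplit(request_url).query)
    hostname = query.get("hostname", [""])[0]
    if not hostname:
        raise ValueError("missing hostname parameter")
    # ip_address() raises ValueError for anything that is not a valid IP.
    ip = ipaddress.ip_address(remote_addr)
    return {"hostname": hostname, "ip": str(ip)}


def build_upstream_url(entry: dict, path: str = "/") -> str:
    """Step 3 (server): the URL used to fetch content from the local machine."""
    ip = ipaddress.ip_address(entry["ip"])
    # IPv6 literals must be wrapped in brackets inside a URL.
    host = f"[{ip}]" if ip.version == 6 else str(ip)
    return f"http://{host}{path}"
```

For example, `build_report_url("https://example.com/access.php", "homebox")` yields the request the client would send, `handle_report` turns that request plus the caller's address into a log entry, and `build_upstream_url` turns the entry back into an address the server can fetch from.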

Why Use FreeDDNS?

- Cost-Effective: FreeDDNS eliminates the need for paid DDNS services, saving you money.

- Customizable: Since it’s self-hosted, you have full control over the system and can tailor it to your needs.

- Reliable: By using simple PHP scripts and a web server, FreeDDNS ensures a lightweight and efficient solution.

- Easy to Implement: The scripts are straightforward and can be set up in minutes, even by users with minimal technical expertise.

FreeDDNS is the perfect solution for anyone looking to access their home network, personal server, or IoT devices remotely without the hassle of manual IP updates or expensive subscriptions. Whether you’re a tech enthusiast, a small business owner, or a hobbyist, FreeDDNS offers a reliable, customizable, and cost-effective way to stay connected. Take control of your dynamic IP challenges today with FreeDDNS, your gateway to seamless remote access.

FreeDDNS (Beta) 1.9kb
