The Rise of AI-Generated Spam on Facebook: Current Issues and Trends

Over the past few days, Facebook has faced a notable increase in spam activity driven by AI-generated content. These posts, often featuring surreal or hyper-realistic images, are part of a coordinated effort by spammers to exploit the platform’s algorithms for financial gain. Here’s a breakdown of the situation and its implications:

What’s Happening?

- AI-Generated Images: Spam pages are flooding Facebook with AI-crafted images, ranging from bizarre art to visually stunning but nonsensical content. A notable example is the wave of viral images depicting statues made from unusual materials, such as “Jesus made of shrimp”.

- Amplification by Facebook Algorithms: These posts gain traction through Facebook’s “Suggested for You” feature. The feature surfaces posts from pages users do not follow and ranks them largely by engagement signals rather than by users’ stated preferences. When users interact with these posts, even unintentionally, the algorithm boosts their visibility further.

- Monetary Motives: Many spam pages link to external ad-heavy or dropshipping sites in the comments, monetizing the engagement from these viral posts. Some pages even invest in Facebook ads to amplify their reach, complicating the platform’s efforts to detect and mitigate such content.

- Global Scale: The spam campaigns are widespread, with some networks of pages collectively amassing hundreds of millions of interactions. This level of engagement shows how difficult such content is to moderate at scale.
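Facebook does not publish its ranking code, so the following is only a toy model of the feedback loop described in the list above. It makes two assumptions:

- The single most-engaged post receives a fixed share of each round's impressions (`boost_share`).
- Every impression converts to engagement at a fixed rate.

`Post`, `simulate_feed` and all parameter values are hypothetical. In the example, two posts with identical appeal start out separated by just ten accidental interactions.

```python
from dataclasses import dataclass


@dataclass
class Post:
    name: str
    engagement_rate: float   # hypothetical: share of impressions that become likes/comments/shares
    engagements: float = 0.0


def simulate_feed(posts, rounds=5, impressions_per_round=1000, boost_share=0.8):
    """Toy engagement-ranked feed (an assumption, not Facebook's algorithm).

    Each round, the post with the most accumulated engagement gets
    `boost_share` of the impressions; the rest are split evenly among
    the other posts. Returns a per-round snapshot of engagement totals.
    """
    history = []
    for _ in range(rounds):
        ranked = sorted(posts, key=lambda p: p.engagements, reverse=True)
        top, rest = ranked[0], ranked[1:]
        top_impressions = impressions_per_round * boost_share
        other_impressions = (impressions_per_round - top_impressions) / max(len(rest), 1)
        # Engagement earned this round decides next round's ranking: the feedback loop.
        top.engagements += top_impressions * top.engagement_rate
        for p in rest:
            p.engagements += other_impressions * p.engagement_rate
        history.append({p.name: round(p.engagements, 1) for p in posts})
    return history


spam = Post("spam", engagement_rate=0.02, engagements=10)   # 10 accidental interactions
friend = Post("friend", engagement_rate=0.02)                # same appeal, no head start
for snapshot in simulate_feed([spam, friend]):
    print(snapshot)
```

Both posts are equally appealing, yet the small head start is enough to lock in the boost, and the gap widens every round (from 26 vs. 4 engagements after the first round to 90 vs. 20 after the fifth). Real ranking systems use many more signals. The sketch only shows why an engagement-driven objective tends to reward whatever attracts clicks first.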

Facebook’s Response

Meta (Facebook’s parent company) has acknowledged the issue and pledged to improve transparency by labeling AI-generated content. The move follows earlier concerns about misinformation and malicious uses of AI on the platform. However, critics argue that Facebook’s reliance on automated moderation tools may not be enough to counter spammers’ evolving tactics.

Broader Implications

- Erosion of Trust: As AI-generated spam becomes more prevalent, users may find it increasingly difficult to distinguish authentic content from manipulated posts.

- Algorithmic Loopholes: The incident underscores the potential vulnerabilities in content recommendation systems, which can inadvertently amplify harmful or deceptive material.

- Economic and Security Risks: The monetization of these schemes often involves redirecting users to risky sites, posing both financial and cybersecurity threats.

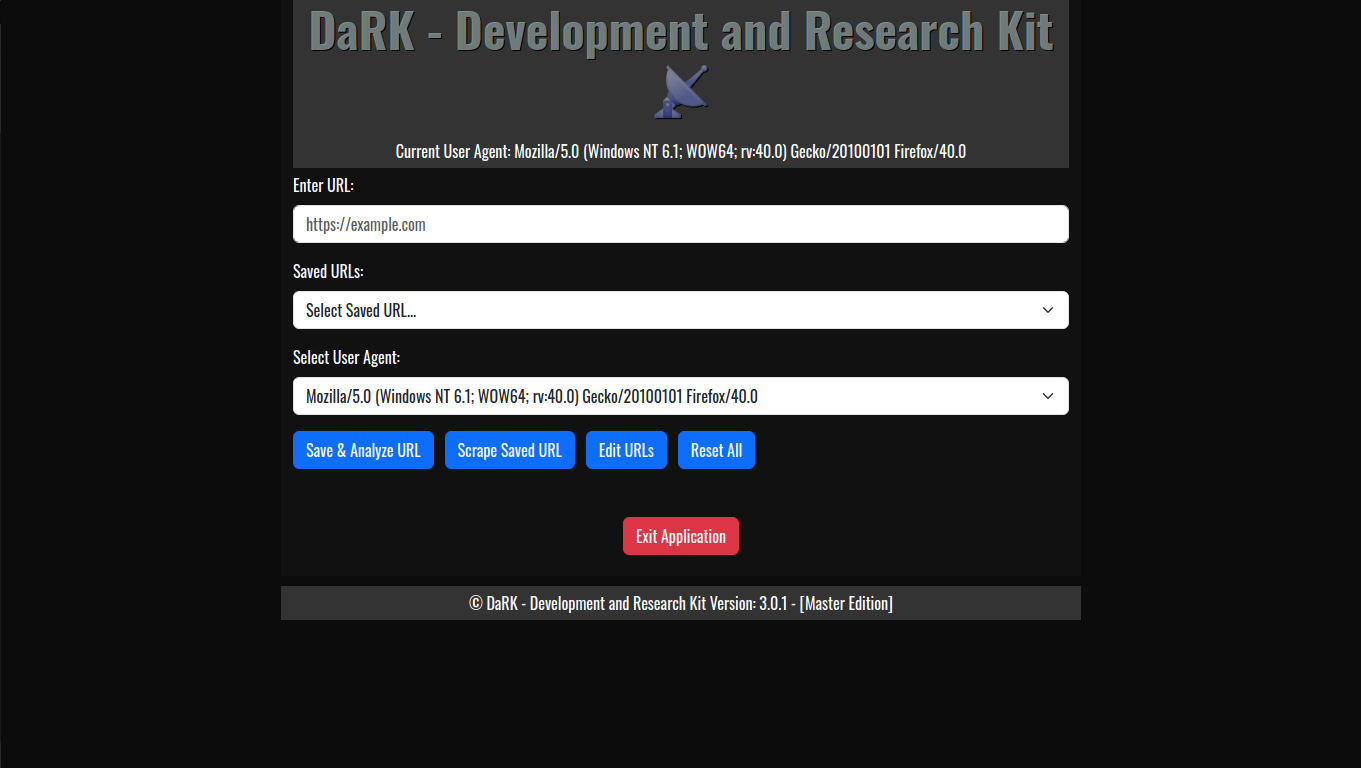

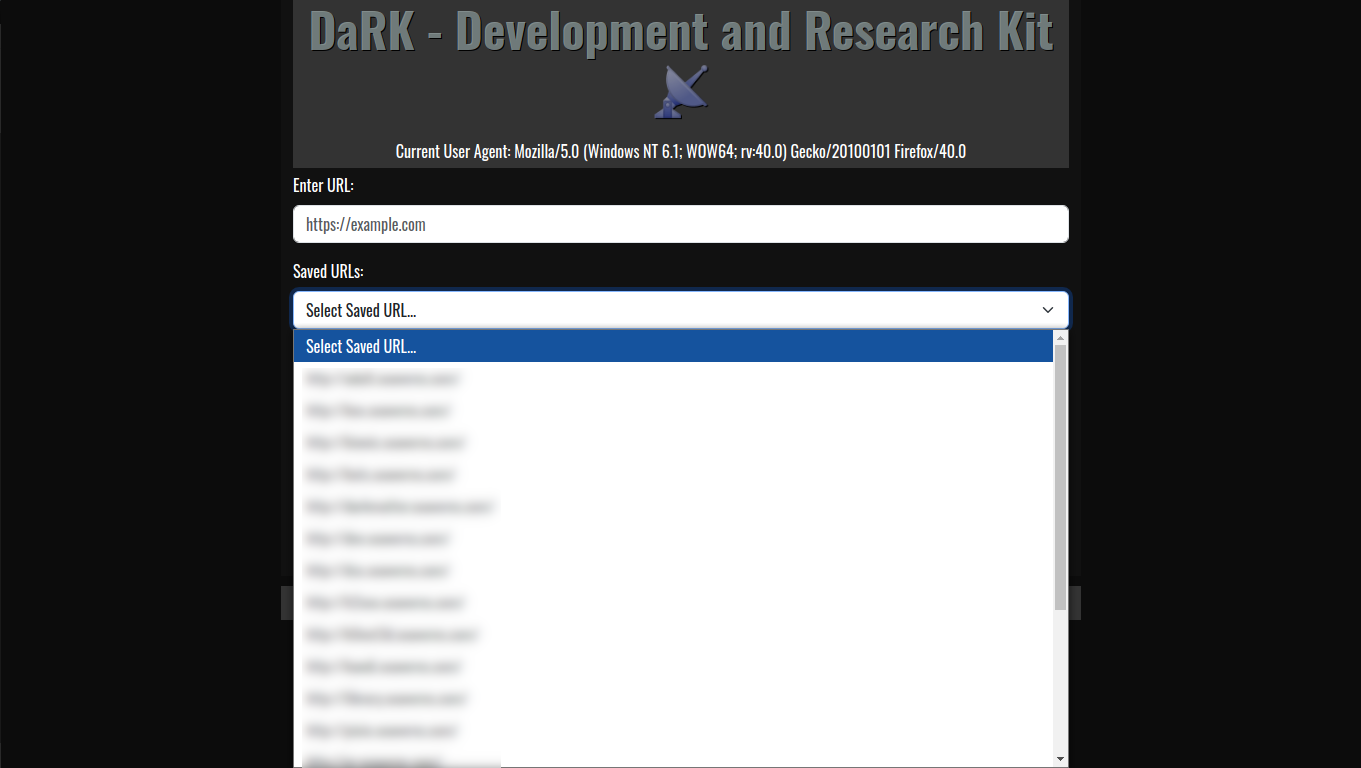

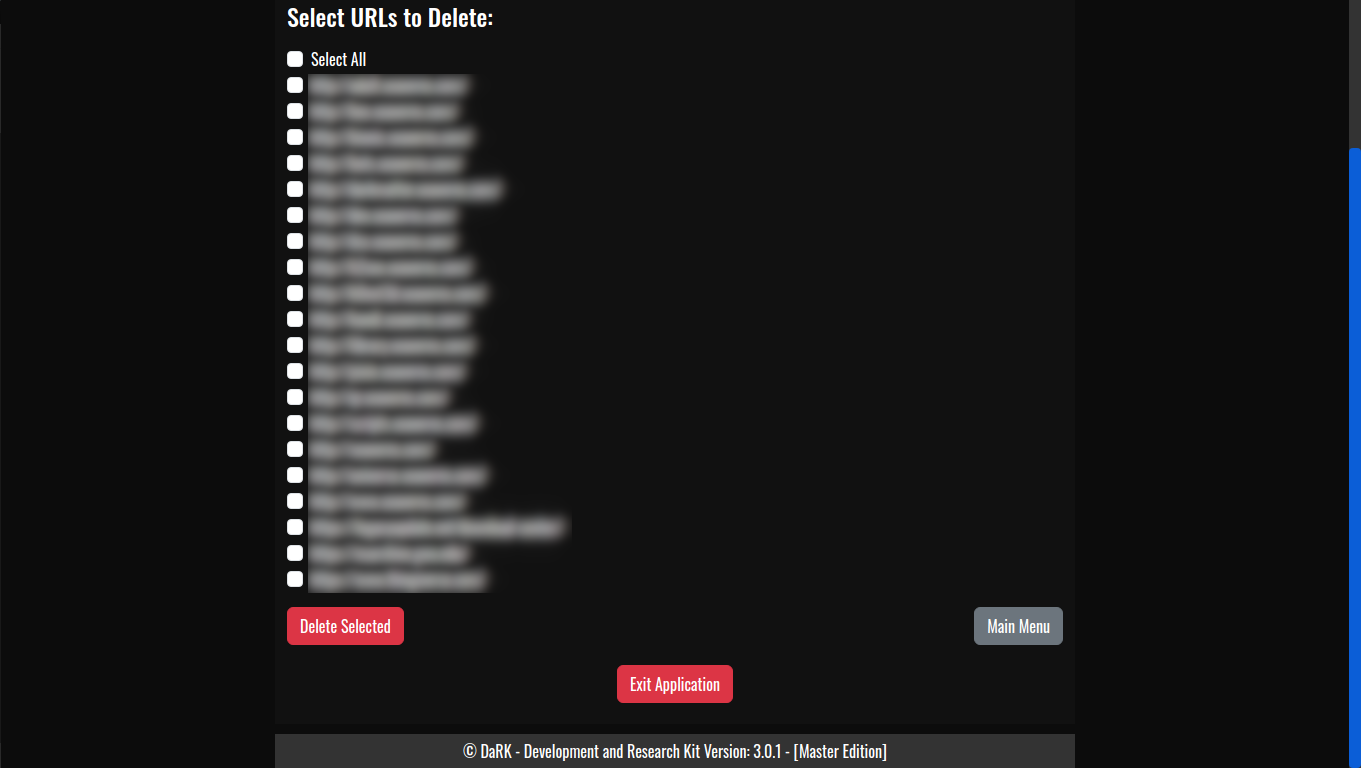

Bot Farms and Spam Ads

The current surge in spam ads on Facebook is primarily linked to bot farms and automation tools that exploit the platform for fake engagement. These bots are not only designed to spread irrelevant ads but also to generate fake clicks, skew ad analytics, and disrupt genuine user experiences. Recent incidents indicate that these ad bots are part of larger operations targeting platforms like Facebook, Instagram, and others.

Two categories of bots dominate Facebook spamming:

- Automated Bots: These are simpler systems designed to mass-produce accounts and post repetitive ads. Facebook’s AI can often detect and block these quickly, but the sheer volume still creates noise.

- Manual or Sophisticated Bots: These accounts, often operated partly by humans, mimic real user behavior, making them harder to detect. They are typically used for more strategic campaigns, such as spreading disinformation or promoting scams.
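Repetitive posting is one reason simple automated bots are comparatively easy to catch. As a rough illustration, not Facebook's actual detector, the sketch below flags accounts whose posts are mostly identical once case, punctuation and URLs are stripped. The function names, the `(account_id, text)` input format and the thresholds `min_posts` and `max_unique_ratio` are hypothetical choices.

```python
import re
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase, drop URLs and punctuation, and collapse whitespace so that
    trivially varied copies of the same ad compare equal."""
    text = re.sub(r"https?://\S+", "", text.lower())
    text = re.sub(r"[^a-z0-9\s]+", "", text)   # note: also strips non-ASCII letters
    return " ".join(text.split())


def flag_repetitive_accounts(posts, min_posts=5, max_unique_ratio=0.3):
    """posts: iterable of (account_id, text) pairs.

    Flags accounts with at least `min_posts` posts where the fraction of
    distinct normalized texts is at or below `max_unique_ratio`.
    """
    by_account = defaultdict(list)
    for account_id, text in posts:
        by_account[account_id].append(normalize(text))
    flagged = set()
    for account_id, texts in by_account.items():
        if len(texts) < min_posts:
            continue  # too little history to judge
        unique_ratio = len(set(texts)) / len(texts)
        if unique_ratio <= max_unique_ratio:
            flagged.add(account_id)
    return flagged


sample = [("bot_1", f"AMAZING deal!!! https://example.com/{i}") for i in range(6)]
sample += [("alice", f"Photo from day {i} of our trip") for i in range(6)]
print(flag_repetitive_accounts(sample))   # {'bot_1'}
```

Sophisticated bots that vary their wording or imitate human posting habits would slip past a check like this, which is what makes them harder to detect.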

Historically, services such as Boostgram and Instant-Fans.com have used bot networks like these to generate fake engagement across multiple platforms, including Facebook. Meta regularly takes legal action against such operators, but many adapt and persist.

Additionally, bot farms often consist of thousands of fake accounts designed to interact with ads, inflating advertiser metrics and draining budgets. Facebook reports substantial efforts to remove fake accounts and claims to block millions each quarter, but sophisticated bots continue to bypass detection.
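To make the budget impact concrete, here is a small calculation with made-up numbers. It assumes a campaign billed per click, with 10,000 impressions, 100 clicks from real people and 400 clicks from a bot farm, at $0.50 per click. The function name and all figures are illustrative only.

```python
import math


def ad_metrics(impressions, real_clicks, bot_clicks, cost_per_click):
    """Compare what an advertiser sees with what real users actually did.
    Assumes pay-per-click billing in which bot clicks are charged like real ones."""
    total_clicks = real_clicks + bot_clicks
    spend = total_clicks * cost_per_click
    return {
        "reported_ctr": total_clicks / impressions,   # click-through rate shown in reports
        "true_ctr": real_clicks / impressions,        # click-through rate from real users
        "spend": spend,
        "wasted_spend": bot_clicks * cost_per_click,
        # effective price paid per genuine click
        "cost_per_real_click": spend / real_clicks if real_clicks else math.inf,
    }


print(ad_metrics(10_000, real_clicks=100, bot_clicks=400, cost_per_click=0.50))
```

With these numbers the report shows a 5% click-through rate, although only 1% reflects real users. Of the $250 spent, $200 goes to bot clicks, so each genuine click effectively costs $2.50 instead of $0.50.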

If you’re seeing increased spam, it might be part of a broader effort by these bot operators to exploit Facebook’s ad systems or test new evasion techniques. Users and advertisers are encouraged to report suspicious activity and remain cautious about ad engagement.

Bot farms are large-scale operations leveraging networks of automated programs to execute repetitive digital tasks for malicious purposes. These include manipulating financial markets, inflating ad metrics, and engaging in cyber fraud. Bot farms often consist of numerous servers, diverse IP address pools, and highly advanced scripts to evade detection, allowing them to operate at scale and with precision.

In financial markets, bots can exacerbate volatility by executing coordinated trades. Examples include pump-and-dump schemes that artificially inflate prices and bursts of high-frequency trades that disrupt normal market behavior. These actions mislead investors, distort price discovery and can destabilize entire markets, especially during periods of economic uncertainty. The effects are not confined to the targeted assets: platforms whose systems respond automatically to price and volume signals can carry the disruption further, creating widespread ripple effects.

Economically, these bot-driven disruptions cause substantial financial losses, costing industries billions annually. Fraudulent advertising metrics, for example, waste business resources while masking true engagement. High-profile operations such as Methbot used hundreds of thousands of fraudulently registered IP addresses to generate illicit ad revenue on a massive scale, undermining trust in digital advertising ecosystems.

Efforts to mitigate the impact of bot farms include deploying machine learning models to identify anomalous behavior, monitoring for IP spoofing, and implementing stronger authentication methods. However, as bot technology continues to evolve, combating their influence requires ongoing innovation, stricter regulations, and global collaboration to protect financial and digital ecosystems from systemic risks.
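Production systems rely on trained machine learning models over many behavioral features, which are beyond the scope of a short example. As a deliberately simpler stand-in, the sketch below applies a statistical outlier test, the modified z-score based on the median absolute deviation (MAD), to a single hypothetical feature: ad clicks per source IP address. The function name, the input data and the commonly used 3.5 cutoff are assumptions for illustration. The IP addresses come from ranges reserved for documentation.

```python
from statistics import median


def flag_click_outliers(clicks_per_ip: dict[str, int], threshold: float = 3.5) -> list[str]:
    """Return IPs whose click counts are unusually high, using the
    modified z-score 0.6745 * (x - median) / MAD."""
    values = list(clicks_per_ip.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # Most sources share the median value, so MAD gives no scale;
        # a real system would fall back to a different test here.
        return []
    return sorted(
        ip for ip, v in clicks_per_ip.items()
        if 0.6745 * (v - med) / mad > threshold   # one-sided: only excess clicks
    )


clicks = {
    "203.0.113.1": 12, "203.0.113.2": 9, "203.0.113.3": 15,
    "203.0.113.4": 11, "203.0.113.5": 10, "198.51.100.7": 480,
}
print(flag_click_outliers(clicks))   # ['198.51.100.7']
```

Using the median rather than the mean keeps a single extreme source from inflating the baseline it is measured against. A bot farm that spreads its clicks across a large pool of IP addresses, as described earlier, would defeat a per-IP check like this. This is one reason real defenses combine many signals.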

Current Events and Developments

- Meta’s AI Transparency Push: Meta has committed to labeling AI-generated images across its platforms, aiming to curtail the spread of manipulated content and improve user awareness.

- Increased Monitoring Efforts: Researchers and watchdogs are ramping up analyses of such campaigns. For instance, researchers at the Stanford Internet Observatory and Georgetown University’s Center for Security and Emerging Technology documented more than 100 spam and scam pages exploiting Facebook’s engagement-driven algorithms.

- User Awareness Campaigns: Public advisories are being issued, encouraging users to avoid interacting with suspicious posts and report them to Facebook for moderation.

What You Can Do

- Avoid Interactions: Refrain from liking, commenting, or sharing suspicious content.

- Report Spam: Use Facebook’s reporting tools to flag AI-generated spam posts.

- Stay Informed: Regularly update your knowledge of online scams and be cautious of external links, especially those posted in comments.

By understanding the tactics and implications of these campaigns, users can help reduce their impact while pushing platforms like Facebook to strengthen their moderation policies.